“Agentic AI” shows up everywhere right now. Job posts, product demos, investor decks. And it can feel slippery because people use it to mean everything from “chatbot with buttons” to “robot that replaces your team.”

Here’s the clean version: agentic AI describes AI systems that don’t just answer. They pursue a goal through multiple steps, they take actions using tools, and they adjust based on what happens. Practical. Measurable. Sometimes a little scary.

Agentic AI, explained in plain English

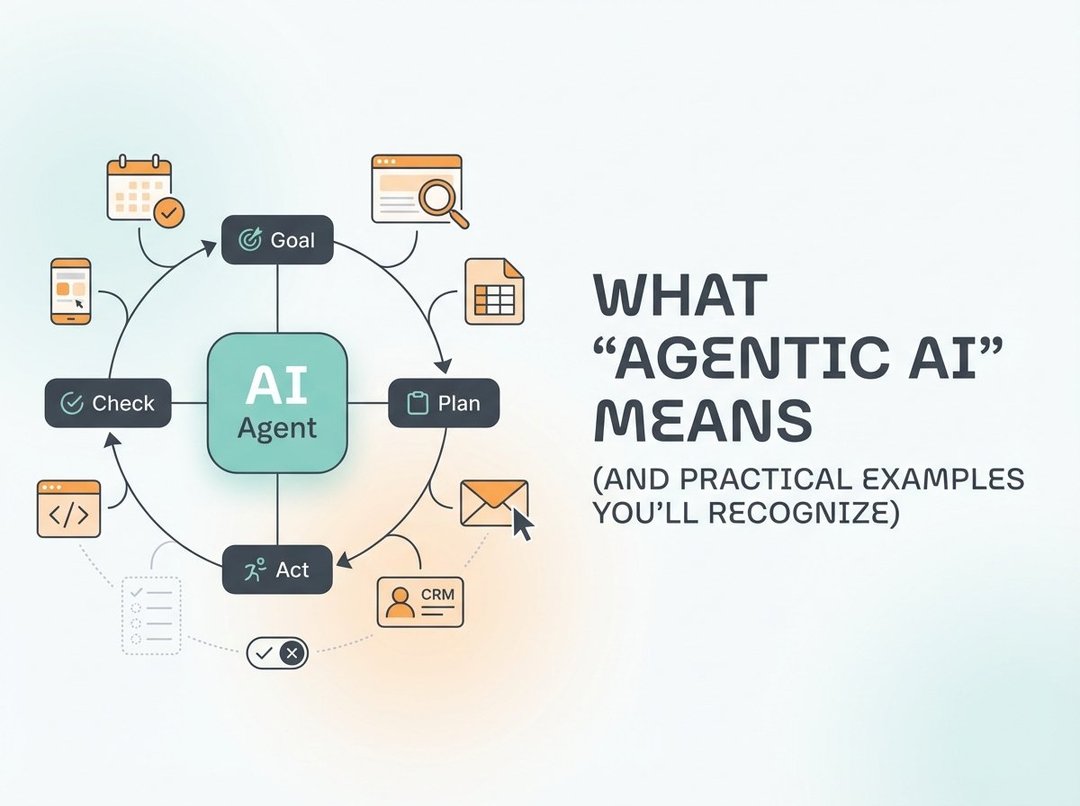

A good working definition goes like this: agentic AI is goal-directed AI that can plan, act, and self-correct with some autonomy. Autonomy matters here. Not because it’s “alive” or “conscious” but because it changes the shape of the work.

A normal chat experience stays inside the conversation. You ask. It responds. An agentic AI system tries to move the world a notch. It might open a calendar, search the web, run code, update a CRM, or draft an email. Then it checks whether that action worked and decides what to do next.

If you want a sticky mental model, use this loop:

- Goal: “Book the cheapest nonstop flight under $500.”

- Plan: break the job into steps.

- Act: use tools to execute steps.

- Check: verify results against the goal.

- Repeat: iterate or ask for help when stuck.

That loop is the heartbeat of agentic workflows.
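Here's a minimal Python sketch of that loop, using the flight goal from the list. Everything in it is made up for illustration: `FlakySearchTool`, the canned flight data, and `run_agent` are assumptions, not a real booking API. The plan is also hardcoded as "search, then filter," where a real agent would generate its own.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Flight:
    airline: str
    price: float
    nonstop: bool


class FlakySearchTool:
    """Hypothetical tool: times out on the first call, then returns canned data."""

    def __init__(self) -> None:
        self.calls = 0

    def search(self) -> list[Flight]:
        self.calls += 1
        if self.calls == 1:
            raise TimeoutError("search timed out")
        return [
            Flight("AirA", 620.0, True),
            Flight("AirB", 410.0, False),
            Flight("AirC", 455.0, True),
            Flight("AirD", 470.0, True),
        ]


def meets_goal(flight: Flight, budget: float) -> bool:
    # Check: compare a candidate against the stated goal.
    return flight.nonstop and flight.price < budget


def run_agent(tool: FlakySearchTool, budget: float, max_steps: int = 3) -> Optional[Flight]:
    for _ in range(max_steps):  # Repeat, but only a bounded number of times
        try:
            options = tool.search()  # Act: call the tool
        except TimeoutError:
            continue  # Observe the failure and try again
        candidates = [f for f in options if meets_goal(f, budget)]
        if candidates:
            return min(candidates, key=lambda f: f.price)
    return None  # Stuck: hand the task back to a human


best = run_agent(FlakySearchTool(), budget=500.0)
print(best)  # Flight(airline='AirC', price=455.0, nonstop=True)
```

Notice that the cheapest flight overall (AirB) loses because it isn't nonstop, and that the agent returns `None` rather than guessing when nothing meets the goal.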

What agentic AI is not (so you can ignore the noise)

People often call something “agentic” when it just feels busy. That creates confusion and bad expectations.

It’s not a chatbot with a long prompt

A long prompt can simulate planning. It can even output steps that look smart. But if the system can’t take actions in a real environment, it’s still just text. No tool use. No feedback. No real agency.

It’s not “automation, but cooler”

Classic automation runs on fixed rules. If X happens, then do Y. It shines when the world stays predictable.

Agentic AI fits better when the world is messy. Requirements shift. Data arrives late. Pages change. Tools fail. The agent can adapt at runtime. That flexibility also introduces new failure modes.

It’s not AGI

Agentic AI does not imply human-level reasoning. It does not imply accountability. And it definitely does not mean you should let it loose with admin permissions.

The key building blocks inside agentic AI systems

Most agentic AI systems rely on the same components. Once you see them, product demos start making more sense.

Goals and constraints

An agent needs a target. It also needs walls.

Goals can come from you. They can come from a task template. They can come from a system objective like “resolve low-risk tickets fast.”

Constraints keep it from doing something “technically successful” but practically awful. Think budgets, time limits, privacy rules, and scope boundaries. For example, “You may draft replies. You may not send them.”

Planning and task decomposition

Agentic AI breaks “do the thing” into steps. That sounds simple until you watch it happen.

“Plan a birthday party” becomes venue, guest list, schedule, shopping, invitations, reminders, and backups. Planning matters because multi-step tasks fail at the seams, in the handoffs between steps. A decent plan leaves fewer seams.

Tools and environments

Tools are where agency becomes real.

A tool can be a browser. It can be a spreadsheet. It can be an API into a business system. It can be a code runner. The “environment” is anything the agent can observe and change through those tools.

This is also where risk shows up. More tool access increases usefulness. It also increases blast radius.

Memory and context

Some agentic AI systems store state across steps. Some store preferences across sessions. Both can help a lot.

But memory cuts both ways. It can go stale. It can store sensitive details. And it can create a false sense of “it knows me” when it only knows a snapshot.

Evaluation and self-checking

Good agents verify. They don’t just proceed.

They cross-check sources. They run tests. They compare outputs to constraints. And they pause before irreversible actions. Verification does not guarantee correctness. It just reduces unforced errors.
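To make "compare outputs to constraints" concrete, here's a small Python sketch. The check names and rules are hypothetical, invented for a drafted support reply. Real systems would use richer checks, but the shape is the same: run every check, collect the failures, and stop if the list isn't empty.

```python
from typing import Callable

Check = Callable[[str], bool]


def failed_checks(draft: str, checks: dict[str, Check]) -> list[str]:
    """Return the names of the checks that the draft does not pass."""
    return [name for name, check in checks.items() if not check(draft)]


# Hypothetical constraints for a drafted support reply.
checks: dict[str, Check] = {
    "under_500_chars": lambda text: len(text) < 500,
    "no_refund_promise": lambda text: "refund" not in text.lower(),
    "has_greeting": lambda text: text.lower().startswith(("hi", "hello")),
}

draft = "Hello Sam, we will refund your order today."
print(failed_checks(draft, checks))  # ['no_refund_promise']
```

An empty list means the draft can move on. Anything else means revise or escalate.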

Practical examples of agentic AI you’ll actually run into

“Agentic” can sound abstract until you picture the behaviors.

Everyday agentic AI examples

1) Travel planning agent

You give dates, budget, and preferences. It searches options, compares tradeoffs, drafts an itinerary, and holds times on your calendar. It asks before booking. That approval step is the difference between helpful and hazardous.

2) Inbox triage agent

It labels messages, summarizes threads, drafts replies, and creates follow-ups. A sensible constraint keeps it from sending anything without confirmation.

3) Shopping comparison agent

It tracks prices, compares specs, and surfaces the real differences. Not “this one is best.” More like “this one saves money but sacrifices battery life.”

Work examples that businesses deploy

Customer support agent-assist tools pull policy snippets, draft responses, and suggest next actions. They escalate when confidence drops or the case looks risky.

Sales research agents assemble account briefs from public sources, propose outreach angles, and list likely objections. They save time on the boring parts, which is the point.

Engineering helper agents reproduce bugs, propose patches, draft pull requests, and run tests. They can accelerate the loop if you keep them inside guardrails.

How to tell if something is truly agentic AI

Here’s a fast checklist. If the system does most of these, it earns the label agentic AI.

- It pursues a goal across multiple steps.

- It uses tools to take real actions.

- It observes outcomes and adapts.

- It asks for approval when consequences are permanent.

- It can explain its plan at a high level.

You can also think in “levels of agency”:

- Level 0: answers only.

- Level 1: suggests actions.

- Level 2: takes actions with approval.

- Level 3: takes bounded actions autonomously with monitoring.

Most sane deployments sit at Level 2. Some reach Level 3 in narrow workflows.
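If it helps to see the levels as code, here's one way to sketch them in Python. The `AgencyLevel` enum and the `next_move` function are illustrative names, not part of any framework. The point is only that the level decides what happens to a proposed action.

```python
from enum import IntEnum


class AgencyLevel(IntEnum):
    ANSWERS_ONLY = 0
    SUGGESTS = 1
    ACTS_WITH_APPROVAL = 2
    ACTS_AUTONOMOUSLY = 3


def next_move(level: AgencyLevel, approved: bool = False) -> str:
    """Decide what the system may do with a proposed action at a given level."""
    if level == AgencyLevel.ANSWERS_ONLY:
        return "answer"
    if level == AgencyLevel.SUGGESTS:
        return "suggest"
    if level == AgencyLevel.ACTS_WITH_APPROVAL:
        return "execute" if approved else "wait_for_approval"
    # Level 3: bounded autonomy, so every action is logged for monitoring.
    return "execute_and_log"


print(next_move(AgencyLevel.ACTS_WITH_APPROVAL))  # wait_for_approval
print(next_move(AgencyLevel.ACTS_WITH_APPROVAL, approved=True))  # execute
```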

Risks and limits, plus the guardrails that help

Agentic AI fails differently than chatbots because mistakes compound across steps.

Common problems include tool misuse, brittle plans, hallucinated facts, and quiet drift from the original goal. Security risks matter too. Prompt injection can trick an agent that browses the web. Over-permissioned tools can turn a small error into a big incident.

The practical mitigations look boring. That’s why they work.

- Least privilege: give minimal tool permissions.

- Human approvals: require confirmation for sends, deletes, refunds, and purchases.

- Sandboxing: isolate risky actions in safe environments.

- Logging and audit trails: make actions reviewable, and easier to undo when something goes wrong.

- Budgets and rate limits: cap time, money, and API calls.
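Several of these guardrails fit in a few lines of code. The Python sketch below wraps tool calls with an allowlist (least privilege), an approval hook, a call budget, and an audit log. `GuardedTools`, the tool functions, and the `approve` callback are all hypothetical names for illustration, and sandboxing is left out because it depends on your infrastructure.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class GuardedTools:
    allowed: set[str]                     # least privilege: tools the agent may use
    needs_approval: set[str]              # tools that require a human yes
    max_calls: int                        # budget: cap on executed tool calls
    approve: Callable[[str, dict], bool]  # hypothetical approval hook
    audit_log: list[tuple[str, str]] = field(default_factory=list)
    calls: int = 0

    def run(self, name: str, tool: Callable[..., str], **kwargs) -> str:
        if name not in self.allowed:
            outcome = "denied: not permitted"
        elif self.calls >= self.max_calls:
            outcome = "denied: budget exhausted"
        elif name in self.needs_approval and not self.approve(name, kwargs):
            outcome = "denied: approval refused"
        else:
            self.calls += 1
            outcome = tool(**kwargs)
        self.audit_log.append((name, outcome))  # record every attempt
        return outcome


def draft_reply(to: str) -> str:
    return f"draft saved for {to}"


def send_email(to: str) -> str:
    return f"email sent to {to}"


def delete_thread(thread_id: str) -> str:
    return f"thread {thread_id} deleted"


guard = GuardedTools(
    allowed={"draft_reply", "send_email"},
    needs_approval={"send_email"},
    max_calls=2,
    approve=lambda name, args: False,  # stand-in for a human who says no
)
print(guard.run("draft_reply", draft_reply, to="sam@example.com"))  # draft saved for sam@example.com
print(guard.run("send_email", send_email, to="sam@example.com"))    # denied: approval refused
print(guard.run("delete_thread", delete_thread, thread_id="t-1"))   # denied: not permitted
```

Denied attempts land in the audit log too, which is often where the interesting review material is.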

For deeper reading on safe AI deployment practices, start with NIST’s AI Risk Management Framework: https://www.nist.gov/itl/ai-risk-management-framework

When to use agentic AI, and when to skip it

Agentic workflows shine when goals are clear, tools are available, and you can verify outcomes. They struggle in high-stakes areas without strong checks, or where accountability must stay strictly human.

Use agentic AI for “messy but reversible” work. Skip it for “one mistake ruins the week” work.

A safe way to try agentic AI this week

Pick one small goal. Something like “organize my inbox into three folders” or “draft a project plan from these notes.”

Then do this:

1) Ask for a plan before any action.

2) Start with read-only access.

3) Require approval for anything irreversible.

4) Review what it did and why.

That’s the real promise of agentic AI. Not science fiction. Just systems that can plan and act, while you keep the steering wheel.