AI in early 2026 feels oddly calm on the surface. Models keep getting better, demos keep going viral, and yet a lot of teams sound… tired. Not because AI stopped working. Because the real work shifted. The state of AI in 2026 rewards people who can pick the right model, control costs, and ship systems that behave under pressure.

This post breaks down the early 2026 AI model landscape, the cost mechanics that actually move your bill, and what’s improving fast enough to change architecture decisions.

What the “State of AI 2026” Actually Means

When people say “the state of AI 2026,” they often mean “which model is best.” That framing is dated. In early 2026, capability is broadly available. Predictability is not.

Think about it this way. Plenty of cars can hit 150 mph. The thing you care about is whether the brakes work in the rain.

In practice, the state of AI in early 2026 has three layers:

- Foundation models: general reasoning, coding strength, multimodal understanding.

- System layer: retrieval, tool orchestration, memory, evaluation, safety constraints.

- Operations layer: cost controls, caching, routing, observability, compliance posture.

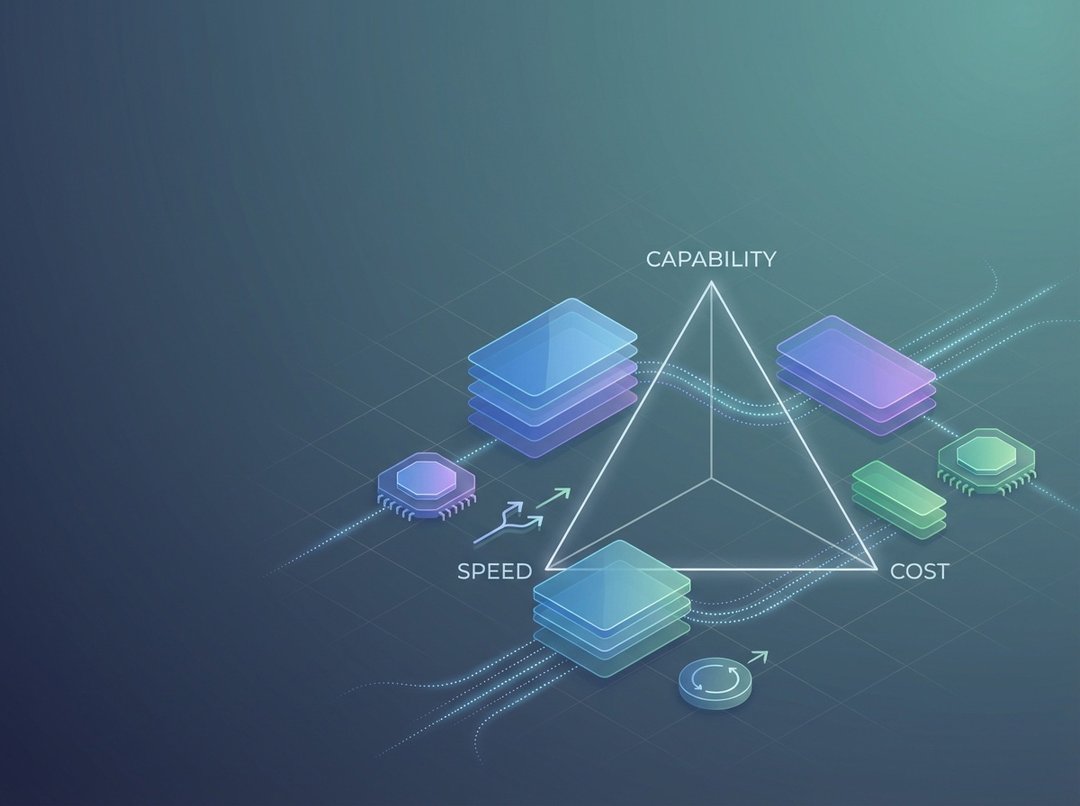

And all of it collapses into one trade space: intelligence, speed, and cost. Push one corner hard and you pay somewhere else.

The Model Landscape in Early 2026

The early 2026 story is not “one model to rule them all.” It is segmentation. Models specialize by latency profile, price curve, and how reliably they follow constraints.

Frontier generalists: expensive competence that still matters

Frontier generalist models remain the best choice when you need reasoning depth plus broad capability. They shine in tasks where “close enough” fails quietly.

Use cases that still justify them in 2026 include:

- Difficult debugging with many interacting causes.

- Planning across multiple tools and brittle APIs.

- High-stakes writing where consistency matters across long outputs.

But you pay for it. Not only in token cost, but also in latency and in orchestration overhead once you add retries and verifiers.

Fast small models: the production workhorses of 2026

Smaller models keep improving. They also keep dropping in price. That combination makes them the default for a surprising amount of real work.

They perform well for:

- Classification and routing.

- Extraction into strict schemas.

- Draft generation that downstream rules will constrain.

- “First pass” summarization before escalation.

Here’s the mindset shift. You do not need a model to be brilliant if the system forces correctness. You need it to be cheap, fast, and consistent.
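Here is a minimal sketch of “the system forces correctness” for the extraction case. It assumes a hypothetical invoice schema and a hypothetical `escalate` callback that would call a larger model. The small model's raw output is accepted only if it parses as JSON with exactly the expected fields and types; anything else goes up a tier.

```python
import json
from typing import Callable, Optional

# Hypothetical schema for an invoice-extraction task: field name -> expected type.
INVOICE_SCHEMA = {"vendor": str, "total": float, "currency": str}


def validate_extraction(raw: str, schema: dict) -> Optional[dict]:
    """Return the parsed record if it matches the schema exactly, else None."""
    try:
        record = json.loads(raw)
    except json.JSONDecodeError:
        return None
    # Reject non-objects and any missing or extra fields.
    if not isinstance(record, dict) or set(record) != set(schema):
        return None
    for field, expected in schema.items():
        value = record[field]
        if isinstance(value, bool):
            return None  # bool subclasses int in Python; this schema has no bool fields
        if expected is float and isinstance(value, int):
            continue  # JSON has one number type, so 12 is a valid total
        if not isinstance(value, expected):
            return None
    return record


def extract(small_model_output: str, escalate: Callable[[], dict]) -> dict:
    """Keep the cheap model's answer if it validates; otherwise take the escalation path."""
    record = validate_extraction(small_model_output, INVOICE_SCHEMA)
    return record if record is not None else escalate()
```

The design choice is that failure is cheap to detect, so the small model can be wrong occasionally without the wrong answer ever leaving the system.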

Reasoning-first modes versus standard chat modes

A major split in early 2026 is between standard generation and explicit reasoning modes. Some providers bill “thinking” or reasoning tokens as part of output pricing. That changes the economics.

Reasoning modes often pay off when tasks have many failure points:

- Tool-driven workflows with branching logic.

- Long-context reconciliation where contradictions hide.

- Multi-step synthesis that benefits from internal scratch work.

If the task is a simple extraction, reasoning tokens can become an elegant way to burn money.
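A back-of-the-envelope sketch shows why. It assumes, purely as a placeholder, an output rate of $10 per million tokens and a provider that bills reasoning tokens at that same output rate. The function name and numbers are illustrative, not any vendor's actual pricing.

```python
def output_cost_usd(visible_tokens: int, reasoning_tokens: int,
                    output_price_per_mtok: float) -> float:
    """Output-side cost when reasoning tokens are billed at the output rate (an assumption)."""
    billed_tokens = visible_tokens + reasoning_tokens
    return billed_tokens * output_price_per_mtok / 1_000_000


# Placeholder rate: $10 per million output tokens (not a real price quote).
PLACEHOLDER_OUTPUT_RATE = 10.0

plain = output_cost_usd(200, 0, PLACEHOLDER_OUTPUT_RATE)
with_reasoning = output_cost_usd(200, 3_000, PLACEHOLDER_OUTPUT_RATE)
print(f"plain: ${plain:.4f}, with reasoning: ${with_reasoning:.4f}, "
      f"ratio: {with_reasoning / plain:.0f}x")
```

With these placeholder numbers, a 200-token extraction costs $0.002 on the output side without reasoning and $0.032 if the model first spends 3,000 reasoning tokens: a 16x jump for the same visible answer.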

Open weights and on-prem: not the default, still strategically useful

Open-weight models remain relevant when constraints matter more than convenience. Data residency requirements, offline environments, and ultra-low latency can justify self-hosting.

But do not confuse “no API bill” with “low cost.” You inherit:

- Serving infrastructure and GPU scheduling.

- Model updates and regression testing.

- Safety tuning and incident response.

In early 2026, open models work best when you have a stable workload and strong internal platform muscle.

AI Costs in 2026: Token Pricing Is the Surface

Token pricing looks simple until it meets production traffic. The bill comes from system design decisions, not marketing pages.

The real cost model for AI in early 2026

At minimum, your cost per request includes:

- Input tokens: system prompts, user input, retrieved context, tool schemas.

- Output tokens: the final response and any structured output.

- Reasoning tokens: often folded into output pricing for “thinking” modes.

- Caching behavior: whether repeated prefixes get discounted.

- Batching: whether you can process asynchronously at lower rates.
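Those components combine into a simple per-request estimate. The sketch below uses placeholder rates (copy real ones from the official pricing pages), treats cached tokens as the part of the input billed at a discounted rate, bills reasoning tokens at the output rate, and applies batch pricing as a flat multiplier. Real vendors differ in details this ignores, such as cache-write surcharges and whether batch and cache discounts stack.

```python
from dataclasses import dataclass


@dataclass
class Rates:
    """Placeholder USD prices per million tokens; copy real values from vendor pages."""
    input_per_mtok: float
    cached_input_per_mtok: float   # discounted rate for cache hits on a repeated prefix
    output_per_mtok: float         # reasoning tokens are assumed to bill at this rate
    batch_multiplier: float = 1.0  # e.g. 0.5 for a 50% batch discount


def request_cost_usd(rates: Rates, input_tokens: int, cached_tokens: int,
                     output_tokens: int, reasoning_tokens: int = 0,
                     batched: bool = False) -> float:
    """Estimate one request's cost; cached_tokens is the cached part of input_tokens."""
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    uncached = input_tokens - cached_tokens
    cost = (uncached * rates.input_per_mtok
            + cached_tokens * rates.cached_input_per_mtok
            + (output_tokens + reasoning_tokens) * rates.output_per_mtok) / 1_000_000
    return cost * rates.batch_multiplier if batched else cost


placeholder = Rates(input_per_mtok=3.0, cached_input_per_mtok=0.3,
                    output_per_mtok=15.0, batch_multiplier=0.5)
print(request_cost_usd(placeholder, input_tokens=10_000, cached_tokens=8_000,
                       output_tokens=500))
print(request_cost_usd(placeholder, input_tokens=10_000, cached_tokens=8_000,
                       output_tokens=500, batched=True))
```

With these placeholder rates the request comes to about $0.0159, or about $0.008 batched, and the 500 output tokens are the largest single line item, not the 10,000-token prompt.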

A better metric than cost per call is cost per successful task. A cheap model that fails twice is expensive.
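If failures are detectable (say, by a verifier) and attempts are independent, the arithmetic is short: with success probability p per attempt, each successful task costs cost_per_attempt / p on average, with or without a retry cap. The prices and success rates below are hypothetical, and the sketch ignores failures that slip past the verifier, which only make the cheap option look worse.

```python
def cost_per_successful_task(cost_per_attempt: float, success_rate: float) -> float:
    """Expected spend per *successful* task when failed attempts are retried.

    Assumes failures are detected and attempts are independent with success
    probability p. Expected attempts per success is then 1 / p (geometric
    distribution); a retry cap turns some tasks into outright failures but
    leaves the spend per success unchanged.
    """
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return cost_per_attempt / success_rate


# Hypothetical numbers: a small model 3x cheaper per call but far less reliable.
small = cost_per_successful_task(cost_per_attempt=0.002, success_rate=0.30)
frontier = cost_per_successful_task(cost_per_attempt=0.006, success_rate=0.95)
print(f"small: ${small:.4f} per success, frontier: ${frontier:.4f} per success")
```

With these made-up numbers, the model that is three times cheaper per call ends up slightly more expensive per successful task (about $0.0067 versus $0.0063).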

Anchor pricing to official sources

Pricing changes quickly. Use the official pricing pages as your baseline for any 2026 cost discussion:

- OpenAI pricing: https://openai.com/api/pricing/ and https://platform.openai.com/docs/pricing

- Anthropic Claude pricing: https://platform.claude.com/docs/en/about-claude/pricing

- Google Gemini API pricing: https://ai.google.dev/gemini-api/docs/pricing

- Amazon Bedrock pricing overview: https://aws.amazon.com/bedrock/pricing/

Two patterns stand out across vendors. First, batch processing commonly offers large discounts. Anthropic documents a 50% Batch API discount. Google documents batch pricing reductions for certain models. AWS Bedrock describes batch inference at 50% lower price for select models. Second, long context can trigger premium tiers. Anthropic documents higher rates above a 200K input threshold for specific long-context configurations.
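Both patterns fit in one small function. This is a sketch with placeholder rates, and two loudly labeled assumptions: that crossing the long-context threshold moves every input token in the request to the premium rate, and that a 50% batch discount stacks on top. Check each vendor's page for the exact rules, including whether output rates also change above the threshold.

```python
def input_cost_usd(input_tokens: int, base_per_mtok: float, premium_per_mtok: float,
                   threshold: int = 200_000, batched: bool = False,
                   batch_discount: float = 0.5) -> float:
    """Input-side cost with a long-context premium tier and an optional batch discount.

    Assumptions (verify per vendor): above the threshold, *every* input token is
    billed at the premium rate, and the batch discount applies on top of that.
    """
    rate = premium_per_mtok if input_tokens > threshold else base_per_mtok
    cost = input_tokens * rate / 1_000_000
    return cost * (1 - batch_discount) if batched else cost


# Placeholder rates in USD per million input tokens, not real quotes.
BASE, PREMIUM = 3.0, 6.0
print(input_cost_usd(200_000, BASE, PREMIUM))                # at the threshold
print(input_cost_usd(200_001, BASE, PREMIUM))                # one token over
print(input_cost_usd(200_000, BASE, PREMIUM, batched=True))  # same request, batched
```

Under these assumptions, one token past the threshold roughly doubles the input bill, while batching the same request halves it.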

Why costs spike: the hidden multipliers

Most teams do not blow budgets because the model is “too expensive.” They blow budgets because tokens bloat.

Common culprits:

- Over-retrieval in RAG. You fetch ten chunks when two would do.

- Verbose system prompts that grow like ivy.

- Tool schemas re-sent every turn.

- “Safety padding” that doubles context windows.
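Over-retrieval is the easiest of these to fix in code. A minimal sketch, assuming each retrieved chunk arrives as a (score, text) pair from some retriever: keep the best-scoring chunks, drop verbatim duplicates after normalizing case and whitespace, and stop at a chunk cap or a token budget. The ~4 characters per token estimate is a crude heuristic, not a real tokenizer; use your provider's tokenizer for anything precise.

```python
def select_context(chunks: list[tuple[float, str]], max_chunks: int = 3,
                   token_budget: int = 1_500) -> list[str]:
    """Keep the highest-scoring unique chunks within a chunk cap and a rough token budget."""
    seen: set[str] = set()
    selected: list[str] = []
    used_tokens = 0
    for _score, text in sorted(chunks, key=lambda chunk: chunk[0], reverse=True):
        key = " ".join(text.lower().split())  # normalize case and whitespace for dedupe
        if key in seen:
            continue
        estimated_tokens = len(text) // 4 + 1  # crude ~4 chars/token heuristic
        if used_tokens + estimated_tokens > token_budget:
            continue  # skip chunks that would blow the budget; smaller ones may still fit
        seen.add(key)
        selected.append(text)
        used_tokens += estimated_tokens
        if len(selected) == max_chunks:
            break
    return selected
```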

Then you add operational multipliers. Retries without backoff. Two models answering the same question for consensus. No caching on stable prefixes. Suddenly your neat spreadsheet estimate looks like a prank.
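Retries without backoff are the simplest multiplier to remove. Here is a minimal sketch of capped exponential backoff with full jitter; `fn` stands in for any model call, and the exception types treated as transient are assumptions to adapt to your client library. The attempt cap matters as much as the backoff, since every retry that reaches the model can be another billed request.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_backoff(fn: Callable[[], T], max_attempts: int = 4,
                      base_delay: float = 0.5, max_delay: float = 8.0,
                      retry_on: tuple = (TimeoutError, ConnectionError),
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn(), retrying transient errors with capped exponential backoff and full jitter."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error instead of retrying forever
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))  # full jitter spreads out synchronized retries
    raise ValueError("max_attempts must be at least 1")
```

The injectable `sleep` parameter exists only to make the helper testable without real waiting.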

A practical cost-control stack that works in 2026

The simplest sustainable pattern looks like this:

- Route first: small model decides task type and risk level.

- Retrieve second: cap retrieval, dedupe aggressively, and compress context.

- Escalate only when needed: reserve frontier reasoning for hard cases.

- Verify: a lightweight checker catches schema breaks and obvious errors.

- Cache: store stable prefixes and long instruction blocks.

Conceptual diagram, end to end:

Ingress → Router model → (Optional) Retrieval → Main model → Verifier → Post-processor → Logs and eval store
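The same flow as a minimal Python sketch. Every stage is passed in as a plain function, so `route`, `retrieve`, `small_model`, `frontier_model`, `verify`, and `log` are hypothetical stand-ins for whatever services you actually run. The point is the control flow: route first, retrieve only when the router asks for it, start cheap, escalate at most once on a failed check, and log every outcome for later evaluation.

```python
from typing import Callable, Optional


def run_pipeline(request: str,
                 route: Callable[[str], dict],
                 retrieve: Callable[[str], list],
                 small_model: Callable[[str, list], str],
                 frontier_model: Callable[[str, list], str],
                 verify: Callable[[str], bool],
                 log: Callable[[dict], None]) -> Optional[str]:
    """Router, optional retrieval, main model, verifier, post-processor, logs."""
    decision = route(request)  # e.g. {"needs_retrieval": True, "risk": "low"}
    context = retrieve(request) if decision["needs_retrieval"] else []

    # High-risk requests go straight to the frontier model; everything else starts small.
    use_frontier = decision["risk"] == "high"
    answer = (frontier_model if use_frontier else small_model)(request, context)
    verified = verify(answer)

    # Escalate at most once, and only when the cheap answer fails verification.
    if not verified and not use_frontier:
        use_frontier = True
        answer = frontier_model(request, context)
        verified = verify(answer)

    log({"request": request, "model": "frontier" if use_frontier else "small",
         "verified": verified, "context_chunks": len(context)})
    return answer.strip() if verified else None  # trivial post-processing step
```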

It is not glamorous. It is how teams win.

What’s Improving Fast in the State of AI 2026

Progress is not uniform. Some areas are sprinting. Others crawl.

Tool use reliability is getting less fragile

Tool calling has moved from “sometimes it works” to “usually it works if you constrain it.” Models increasingly adhere to schemas and recover from tool errors without spiraling.

Consequently, you can simplify orchestration. You rely less on defensive prompt gymnastics. You also need fewer retries, which cuts cost and latency directly.

Long context is more usable, not magically perfect

Context windows keep expanding, but bigger is not the same as better memory. Long context helps when you pair it with structure: chunking, retrieval discipline, and caching.

Still, long context creates its own failure mode: context dilution. The model sees everything and therefore commits to nothing.

Multimodal is moving from “cool” to operational

Image and document understanding now support real workflows: support triage, QA review, and light compliance checks. Video remains costlier and slower, but summarization and indexing pipelines keep improving.

The hard part is evaluation. Multimodal ground truth is expensive to create. Without it, teams argue from vibes.

Agentic workflows are maturing with constraints

Agents work when you treat them like constrained processes, not autonomous coworkers. The winning setups use explicit termination rules, budgets, logging, and replay.

The losing setups let the agent “think” forever. It feels intelligent. It ships nothing.
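A minimal sketch of those constraints, assuming a hypothetical `step_fn` that performs one agent step (a model call plus any tool call) and reports what it did, how many tokens it spent, and whether it is finished. The loop enforces a step budget and a token budget, logs each step, and returns the full history so a run can be inspected afterwards, which is the raw material for replay.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Budget:
    max_steps: int = 8         # hard cap on iterations
    max_tokens: int = 20_000   # hard cap on tokens spent across all steps


def run_agent(step_fn: Callable[[list], dict], budget: Budget,
              log: Callable[[dict], None]) -> dict:
    """Run step_fn until it reports done or a budget runs out.

    step_fn(history) is hypothetical: it performs one agent step and returns
    {"action": str, "tokens": int, "done": bool}.
    """
    history: list[dict] = []
    tokens_used = 0
    for step in range(budget.max_steps):
        result = step_fn(history)
        tokens_used += result["tokens"]
        history.append(result)
        log({"step": step, "action": result["action"], "tokens_used": tokens_used})
        if result["done"]:
            status = "done"
        elif tokens_used >= budget.max_tokens:
            status = "token_budget_exhausted"
        else:
            continue
        return {"status": status, "steps": step + 1, "tokens": tokens_used, "history": history}
    return {"status": "step_budget_exhausted", "steps": budget.max_steps,
            "tokens": tokens_used, "history": history}
```

The token budget is checked after each step, so a single expensive step can overshoot it; a stricter version would pass the remaining budget into `step_fn`.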

How to Choose Models in Early 2026

Model choice in 2026 is an engineering decision, not a personality test.

Pick the cheapest model that meets a measurable bar. Then prove it.

A clean selection loop:

- Define success criteria in plain language.

- Build a small eval set from real tasks.

- Baseline a fast model.

- Escalate only when metrics demand it.

- Re-test after every prompt or retrieval change.
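The selection loop can be sketched in a few lines. The candidate models, their per-call costs, and each eval case's `check` function are hypothetical. The shape is what matters: evaluate the cheapest candidate first and stop at the first one that clears the bar, so expensive models only get evaluated when cheaper ones fail. Sorting by cost per call is a proxy; once you have success rates, re-rank by cost per successful task.

```python
from typing import Callable, Optional


def accuracy(model: Callable[[str], str], eval_set: list[dict]) -> float:
    """Fraction of eval cases whose output passes that case's check function."""
    passed = sum(1 for case in eval_set if case["check"](model(case["input"])))
    return passed / len(eval_set)


def select_model(candidates: list[tuple[str, float, Callable[[str], str]]],
                 eval_set: list[dict],
                 min_accuracy: float) -> tuple[Optional[str], Optional[float]]:
    """Return the cheapest candidate that clears the bar, evaluating cheapest first."""
    for name, _cost_per_call, model in sorted(candidates, key=lambda c: c[1]):
        score = accuracy(model, eval_set)
        if score >= min_accuracy:
            return name, score  # stop here: pricier models never get evaluated
    return None, None
```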

Evaluate on four dimensions:

- Quality: grounded accuracy and schema validity.

- Latency: p50 and p95 under realistic concurrency.

- Cost: per successful task with retries included.

- Risk: data exposure, jailbreak resilience, auditability.
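For the latency dimension, report percentiles rather than averages, because a handful of slow requests can shape user experience while barely moving the median. A minimal nearest-rank percentile helper, assuming latencies in milliseconds collected from a load test run at realistic concurrency (the sample values are hypothetical):

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))  # 1-based rank
    return ordered[rank - 1]


# Hypothetical latencies in milliseconds from one load-test run.
latencies_ms = [120, 130, 125, 900, 140, 135, 128, 132, 138, 2000]
print("p50:", percentile(latencies_ms, 50), "ms")
print("p95:", percentile(latencies_ms, 95), "ms")
```

For these ten samples, p50 is 132 ms while p95 is 2,000 ms, which is exactly the gap an average would blur.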

Where the State of AI Is Headed After Early 2026

Expect price pressure at the bottom and segmentation at the top. Fast models will keep getting cheaper. Frontier reasoning will stay premium, but systems will route to it more selectively.

Increasingly, competitive advantage will come from the system layer. Evals. Routing. Data curation. Observability. The “state of AI 2026” is already drifting from model worship toward operational discipline. That trend will harden.