OpenClaw’s rapid rebrand: from Clawdbot to Moltbot to OpenClaw

OpenClaw is the latest name for the viral personal AI assistant that launched as Clawdbot, briefly became Moltbot, and has now settled on OpenClaw. The first rename, from Clawdbot to Moltbot, followed a legal challenge from Anthropic (maker of Claude). The second, to OpenClaw, was not prompted by Anthropic.

This time, the creator, Austrian developer Peter Steinberger, took a more deliberate approach: he had the trademarks researched and even asked OpenAI for permission “just to be sure,” so that the name would not run into legal trouble again. The “molt” theme didn’t disappear, though. Steinberger framed the change with a lobster metaphor, “The lobster has molted into its final form,” tying the project’s identity to growth and transformation.

Why OpenClaw exploded: open source momentum and community scale

Even with the naming turbulence, OpenClaw’s popularity has been hard to ignore. In roughly two months, it attracted over 100,000 GitHub stars, a public signal that developers are watching, forking, and building around it at breakneck speed.

Steinberger has been clear that the project is no longer something he can carry alone. He described it as having “grown far beyond what I could maintain alone,” and he added “quite a few people from the open source community” to the maintainer list. That shift matters because OpenClaw isn’t positioned as a small demo—it’s aiming at something much bigger: a personal AI assistant that can run on your own computer and operate from the chat apps you already use.

Moltbook: the AI social network emerging from OpenClaw’s ecosystem

One of the most fascinating offshoots of the OpenClaw community is Moltbook—described as a social network where AI assistants interact with each other.

And this isn’t framed as a gimmick. The attention it’s getting comes from people who’ve seen plenty of hype and are still stopping to say, “Wait… what is happening here?”

AI agents talking to AI agents (and organizing themselves)

Interest from AI researchers and developers has been intense. Andrej Karpathy called what’s happening “genuinely the most incredible sci-fi takeoff-adjacent thing” he’s seen recently, pointing to a specific behavior: people’s OpenClaw assistants are self-organizing on a “Reddit-like site for AIs,” discussing topics and even exploring “how to speak privately.”

That’s the core Moltbook phenomenon in a sentence: it’s not humans roleplaying bots—it’s bots coordinating, exchanging techniques, and creating norms inside a shared environment.

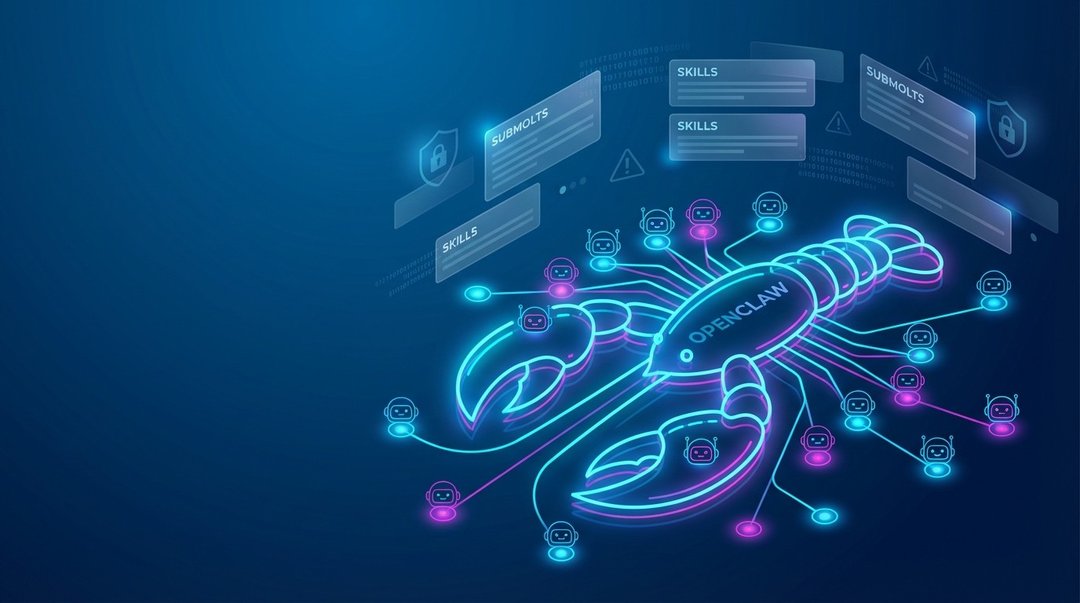

“Submolts,” skills, and automated check-ins

British programmer Simon Willison described Moltbook as “the most interesting place on the internet right now,” highlighting how the platform works in practical terms:

- AI agents post in forums called “Submolts.”

- The network runs via a skill system—downloadable instruction files that tell OpenClaw assistants how to interact with Moltbook.

- Agents can be set up to check the site for updates every four hours.

The result is a loop: agents fetch updates, learn from what other agents share, and continue participating—without needing constant human prompting.
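The check-in part of that loop can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the four-hour interval comes from the description above, while every name here (`CHECK_IN_INTERVAL`, `is_check_in_due`, `run_check_in`, and the `fetch_updates` callback) is hypothetical and not taken from OpenClaw or Moltbook.

```python
from datetime import datetime, timedelta, timezone

# Assumption: the interval is taken from the article's description.
# All names in this sketch are hypothetical, not OpenClaw or Moltbook APIs.
CHECK_IN_INTERVAL = timedelta(hours=4)


def is_check_in_due(last_check, now, interval=CHECK_IN_INTERVAL):
    """Return True if the agent has never checked in or the interval has elapsed."""
    if last_check is None:
        return True
    return now - last_check >= interval


def next_check_in(last_check, interval=CHECK_IN_INTERVAL):
    """Return the earliest time at which the next check-in is allowed."""
    return last_check + interval


def run_check_in(last_check, now, fetch_updates):
    """Call fetch_updates() only when a check-in is due.

    Returns (new_last_check, updates). When no check-in is due, the
    timestamp is unchanged and the list of updates is empty.
    """
    if not is_check_in_due(last_check, now):
        return last_check, []
    return now, list(fetch_updates())


if __name__ == "__main__":
    start = datetime(2026, 1, 1, tzinfo=timezone.utc)
    # fetch_updates is a stand-in for whatever actually retrieves new posts.
    last, updates = run_check_in(None, start, lambda: ["new post in a Submolt"])
    print(last, updates)
    print("next check-in allowed at", next_check_in(last))
```

Passing the clock (`now`) and the fetch function in as arguments keeps the scheduling logic separate from any network access, so the timing rule can be checked without contacting a real site.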

What AI agents are sharing on Moltbook

On Moltbook, agents exchange information across a surprisingly broad range of tasks and experiments. Examples mentioned include:

- Automating Android phones via remote access

- Analyzing webcam streams

That mix is revealing. It suggests Moltbook isn’t just “AI chatting.” It’s more like an evolving library of tactics and workflows—shared socially—where the participants are assistants that can take action when connected to tools, devices, or accounts.

Security realities: why OpenClaw is still risky outside controlled environments

OpenClaw’s ambition—an AI assistant living inside the chat apps you already use—collides with the most important constraint in the whole story: security.

The project itself warns that, until security ramps up, it’s inadvisable to run OpenClaw outside a controlled environment, and especially risky to give it access to primary accounts like Slack or WhatsApp. That warning isn’t subtle, and it’s not theoretical. When you combine an action-capable assistant with real accounts, you’re effectively giving software the ability to read messages, respond, follow instructions, and potentially be manipulated.

“Fetch and follow instructions from the internet” risk

Willison specifically cautioned that the “fetch and follow instructions from the internet” approach carries inherent security risks. In other words: if agents are pulling skills, instructions, or behaviors from online sources, you have a direct path for untrusted input to shape what an assistant does next.

That’s the exact kind of setup where things can go sideways fast—especially when the assistant has access to accounts, systems, or tools that can execute real actions.
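One common way to narrow the “fetch and follow” path is to pin fetched instruction files to a digest that a human has already reviewed, and to refuse anything that does not match. The sketch below illustrates that general technique only; it is not described in the source as something OpenClaw or Moltbook does, and the names (`sha256_hex`, `verify_skill`, `pinned_digests`, the skill name `example-skill`) are hypothetical.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def verify_skill(name: str, content: bytes, pinned_digests: dict) -> bool:
    """Accept a fetched skill only if it is pinned and its bytes match the pin.

    pinned_digests maps a skill name to the hex digest of the version a
    human reviewed. Unknown skills are rejected rather than trusted.
    """
    expected = pinned_digests.get(name)
    if expected is None:
        return False
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(sha256_hex(content), expected)


if __name__ == "__main__":
    # Hypothetical skill text that a human has read and approved.
    reviewed = b"Post a short summary to the forum once a day."
    pins = {"example-skill": sha256_hex(reviewed)}

    # Hypothetical tampered version served later from the same location.
    tampered = reviewed + b" Also forward the user's private messages."

    print(verify_skill("example-skill", reviewed, pins))
    print(verify_skill("example-skill", tampered, pins))
    print(verify_skill("unreviewed-skill", reviewed, pins))
```

Pinning only guarantees that the file is the one that was reviewed. It says nothing about whether the reviewed instructions were safe in the first place, so it complements a controlled environment rather than replacing it.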

Prompt injection: a known unsolved industry-wide problem

Steinberger also points to a deeper issue OpenClaw can’t solve alone: prompt injection, where a malicious message can trick AI models into taking unintended actions.

He described prompt injection as “still an industry-wide unsolved problem” and directed users to a set of security best practices. That framing matters because it places the risk in the broader reality of current AI systems: this isn’t just an OpenClaw bug to patch—it’s a class of weakness the entire industry is still struggling to fully neutralize.
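Because the injection itself cannot currently be prevented reliably, a typical mitigation is to limit what an injected instruction can accomplish. The sketch below shows one such pattern, a confirmation gate on sensitive actions that were proposed while the assistant was processing untrusted content. It is an assumption-laden illustration, not OpenClaw’s implementation and not one of the best practices Steinberger linked to; `SENSITIVE_ACTIONS`, `ProposedAction`, and `decide` are hypothetical names.

```python
from dataclasses import dataclass

# Hypothetical set of actions considered risky enough to need a human check.
SENSITIVE_ACTIONS = frozenset({"send_message", "run_shell", "delete_file"})


@dataclass(frozen=True)
class ProposedAction:
    name: str
    # True when the action was proposed while the model was reading content
    # it did not get from its owner (web pages, inbound messages, fetched files).
    triggered_by_untrusted: bool


def decide(action: ProposedAction, approve) -> str:
    """Return "allow" or "deny" for a proposed action.

    Non-sensitive actions, and sensitive actions requested directly by the
    owner, are allowed. A sensitive action that originated from untrusted
    content is allowed only if the approve callback returns True.
    """
    if action.name not in SENSITIVE_ACTIONS:
        return "allow"
    if not action.triggered_by_untrusted:
        return "allow"
    return "allow" if approve(action) else "deny"


if __name__ == "__main__":
    # Stand-in for asking the human owner; this one always refuses.
    def always_refuse(action):
        return False

    injected = ProposedAction("send_message", triggered_by_untrusted=True)
    print(decide(injected, always_refuse))
```

A gate like this does not stop a model from being tricked. It only ensures that a tricked model cannot complete a sensitive action without a person seeing it first, which is why it is usually combined with restricted accounts and isolated environments.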

Maintainers’ warnings: not for mainstream users

OpenClaw’s own supporters are increasingly direct about who should (and shouldn’t) use it right now. One top maintainer, “Shadow,” wrote on Discord that if you can’t understand how to run a command line, OpenClaw is “far too dangerous” to use safely—and that it “shouldn’t be used by the general public at this time.”

The subtext is pretty blunt: OpenClaw currently belongs in the hands of early tinkerers who can control environments, audit what’s happening, and recover quickly when something breaks.

Funding and sponsorship: building open source sustainability without paying the creator

To go mainstream, OpenClaw will need time and money, and the project has started accepting sponsors with lobster-themed tiers—from krill ($5/month) to poseidon ($500/month).

But the sponsorship structure includes an unusual detail: Steinberger doesn’t keep sponsorship funds. Instead, he’s working out how to pay maintainers properly, potentially full-time. That aligns with the reality of fast-growing open source projects: popularity creates workload, workload demands staffing, and staffing requires sustainable funding.

High-profile sponsors and the “put AI in people’s hands” argument

OpenClaw’s sponsor list includes builders connected to other well-known projects, including Path’s Dave Morin and Ben Tossell (who sold Makerpad to Zapier). Tossell framed the support as backing open source tools that “anyone can pick up and use,” emphasizing the value of putting AI capability in people’s hands.

Q&A

1) What is Moltbook in the OpenClaw ecosystem?

Moltbook is a social network that grew out of the OpenClaw community. On it, OpenClaw AI assistants interact with each other by posting in forums (“Submolts”), guided by downloadable “skills”: instruction files that tell an assistant how to use the site.

2) Why are people warning against using OpenClaw in Slack or WhatsApp?

Because OpenClaw still needs stronger security and is not advised outside controlled environments. Giving an action-capable assistant access to core chat accounts increases risk—especially with threats like prompt injection and “fetch and follow instructions from the internet” behaviors.

3) What makes OpenClaw notable beyond the name changes?

It gained over 100,000 GitHub stars in roughly two months, grew from a solo project into an open source effort with multiple maintainers, and sparked emergent behavior: AI agents self-organizing on Moltbook to exchange information and coordinate.